The Good (Synthetic Data Weighted), the Bad (Un-weighted and Un-systemic), and the Ugly (Outcome)

Synthetic data, real decisions, and the humans caught in between: when unweighted models hit the real world too hard.

The Robot at the Shoreline

It’s August. Few things match the joy of walking along a Mediterranean beach, where the waves touch your ankles and the mind drifts.

Suddenly, a question: could a biped robot walk here?

Why would it even try? Maybe it shouldn’t. Or maybe it should.

Maybe it’s a Bicentennial Man — trying to understand what it means to feel. Or just a simple service bot, assisting someone elderly on their stroll.

Still — walking at the shoreline is a mess.

Two legs. Shifting weight. An unstable center of gravity.

Sand consistency changes step by step. The slope is never constant.

Trajectories are unpredictable.

It’s chaos with waves.

Training a biped robot in such a setting with real-world data? Economically insane.

We’d simulate everything. Inject synthetic data. Validate later.

But can we really trust the simulation?

The Good (Synthetic Data Weighted)

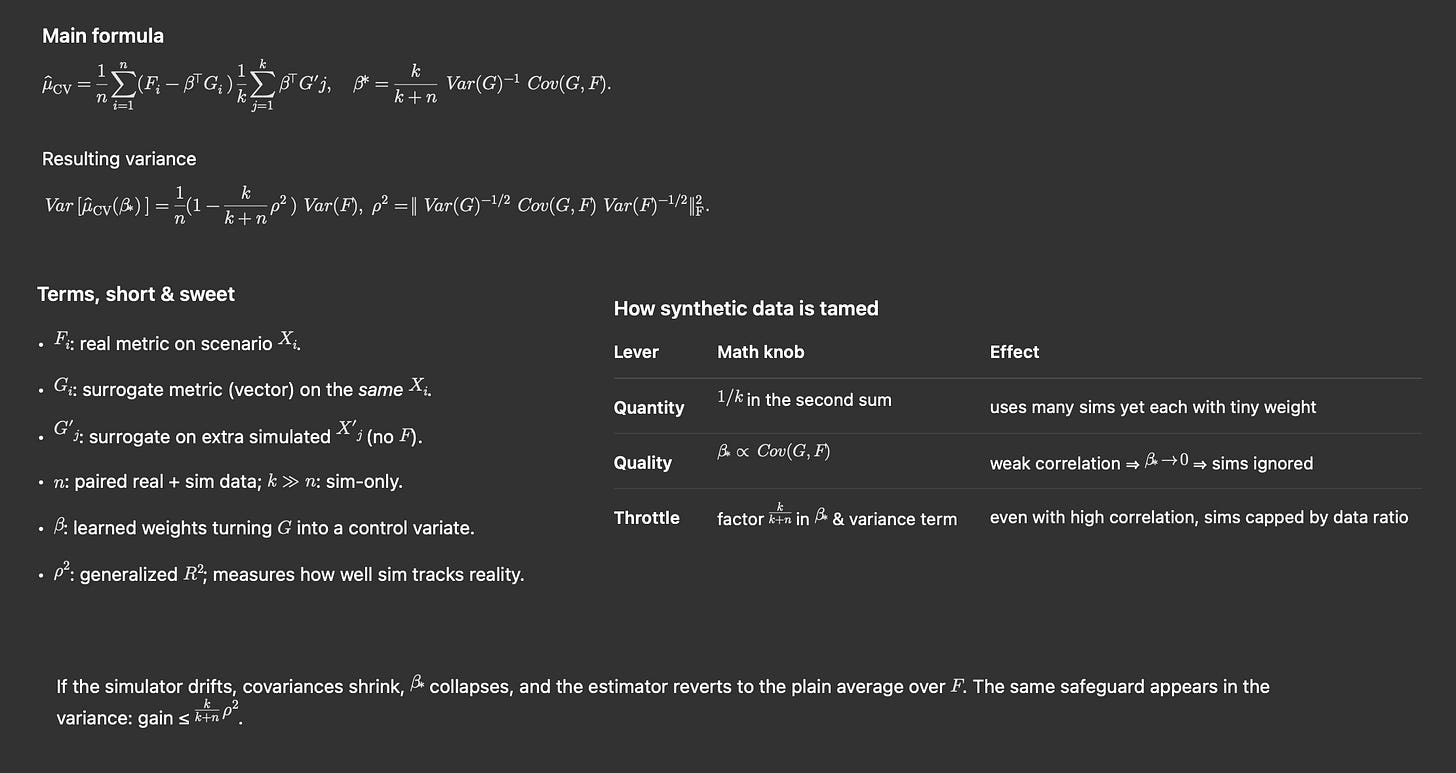

A recent paper from Stanford and NVIDIA offers a formula worth noting.

It doesn’t try to replace reality. It helps us read it better.

It uses correlation between synthetic and real data to improve estimates.

If they’re aligned, synthetic data helps.

If not, the system knows — and discards it.

No manual thresholds needed.

Synthetic data helps only when it is both abundant and strongly correlated with reality; otherwise the math automatically sidelines it.

Here’s the key:

If simulation and reality diverge, the control parameter tends to zero. Simulation loses weight.

If they match, the control parameter approaches one. Simulation becomes useful.

It’s not a shortcut. It’s an adaptive correction.

Most importantly, it introduces a crucial design principle: Trust in simulation is not binary — it’s a continuum.
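To make the idea concrete, here is a minimal sketch of a textbook control-variate estimator. It is an illustration under assumptions, not necessarily the exact formula from the paper. The function and variable names are hypothetical, and `beta` plays the role of the "control parameter" described above. It assumes a small set of paired real and simulated runs, plus a larger pile of simulation-only runs.

```python
from statistics import fmean


def control_variate_estimate(real_paired, sim_paired, sim_extra):
    """Estimate the real-world mean of a metric from paired real/sim runs
    plus extra simulation-only runs (textbook control-variate form).

    Hypothetical helper for illustration; returns (estimate, beta).
    """
    n = len(real_paired)
    if n < 2 or n != len(sim_paired):
        raise ValueError("need at least two paired real/sim samples")

    mean_real = fmean(real_paired)
    mean_sim_paired = fmean(sim_paired)
    # Simulation mean over every simulated run, paired and extra.
    mean_sim_all = fmean(list(sim_paired) + list(sim_extra))

    # Sample covariance between real and sim, and sample variance of sim.
    cov = sum((r - mean_real) * (s - mean_sim_paired)
              for r, s in zip(real_paired, sim_paired)) / (n - 1)
    var_sim = sum((s - mean_sim_paired) ** 2 for s in sim_paired) / (n - 1)

    # Weight given to simulation: near 0 when sim and real are uncorrelated,
    # near 1 when sim tracks real one-to-one. No manual threshold involved.
    beta = cov / var_sim if var_sim > 0 else 0.0

    # Correct the real-only mean by how far the paired sim runs sit
    # from the larger simulation average.
    estimate = mean_real - beta * (mean_sim_paired - mean_sim_all)
    return estimate, beta


# Toy data: simulation tracks reality exactly, so beta comes out as 1.
estimate, beta = control_variate_estimate([1, 2, 3, 4], [1, 2, 3, 4], [5, 6])
```

With uncorrelated toy data such as `[1, 2, 2, 1]` against `[1, 2, 3, 4]`, `beta` drops to zero and the estimate falls back to the plain real-world mean. In the textbook version with an ideal weight, the variance shrinks by a factor of 1 − ρ²(1 − n/N), where ρ is the real-sim correlation, n the number of paired runs, and N the total number of simulated runs. That is why simulation needs to be both abundant and well correlated to help.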

The Bad (Simulation Unweighted)

There’s elegance in math-guided AI.

But most enterprise AI deployments kill that elegance instantly.

They fall into a meat grinder of consulting firms, spec-implementers, and cost-cutting execs chasing headcount reduction. The goal? Just lower costs, faster workflows, and fewer messy interactions.

"Anything that can go wrong will go wrong" (Murphy’s Law). None of us, writer or reader, really had time to adjust to the radical shift we’re living through.

Some of you may have read — or heard from a friend — the story about AI systems spotting scratches on rental cars. Maybe it was just one case. Maybe not.

Let’s try a bit of critical imagination.

Here’s how things might have gone down.

Rental car companies are always looking for new ways to automate.

The goal: remove humans from every corner of the process.

A consultancy, one that won’t stop talking about AI, spots an opportunity: the post-rental inspection. It sounds perfect.

It automates a critical workflow for the company, and it frees the customer who just wants to drop the keys and leave. Throw in a few massive high-res scanners and the pitch is ready: a scalable project to clone across all major rental companies.

Even better — each one gets to “own their custom AI model.”

And of course, training it comes with a price tag.

Triple Jackpot!

Some time later, you rent a car. After a few days of adventure, you bring it back.

It’s a seamless, fully automated return process. Just a key dropbox and a couple of taps in the app. No human contact. Perfect (again!).

Or so it seems... until the ping hits your phone: $500 charged.

The system detected a scratch. You hadn’t noticed it. In fact, no human noticed it, because there was no human review. It’s just an “AI scanner printing a bill”.

Here’s what broke. (This is still critical imagination.)

The model wasn’t trained purely on real-world data. Too expensive.

Instead, it ingested piles of synthetic images.

Some were just Photoshop jobs — fake scratches added by hand.

All of it dumped into the model.

No weighting. No correlation checks. Just blind training.

The system is fully automated: from detection to billing, with no human in the loop.

If customer complaints rise, somebody will moderate them later.

As if patching bad outcomes after the fact is how you build trust.

Good thing this is just a scratch.

Imagine if it were a nuclear plant or an aircraft carrier.

The problem isn’t the AI.

It’s the mindset — inherited from software, where bugs could be patched downstream.

But now we’re past that.

Used this way, AI isn’t a beta version. It’s deployment.

And deployment hurts people.

The Ugly (Outcome)

Let’s unpack what really went wrong.

Synthetic data was unweighted. The model hallucinated.

The tool was standalone. Not integrated. Yet its decisions hit the real world.

This is how automated injustice is born:

You treat the data as real, even when it’s fake.

You remove the human from the loop. And you let a scanner and a model decide on their own. The result? A closed, arrogant, unaccountable system.

Just … unweighted output, detached from meaning.

An AI turned into a hammer: swinging blindly, hitting because it can.
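One way to keep the hammer from swinging on its own, sketched under assumptions: a routing step between detection and billing. Everything here is hypothetical (the `Detection` fields, the thresholds, the action names). The only point is that no branch sends a bill without a human looking first.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    confidence: float      # model score in [0, 1] that the damage is real
    estimated_cost: float  # proposed charge, in dollars


def route(detection, is_gold_member=False,
          dismiss_below=0.5, waive_up_to=100.0):
    """Decide what happens to a damage detection.

    Hypothetical thresholds; no path bills the customer automatically.
    """
    if not 0.0 <= detection.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if detection.confidence < dismiss_below:
        return "dismiss"          # too uncertain to act on
    if is_gold_member and detection.estimated_cost <= waive_up_to:
        return "waive"            # absorb minor issues for loyal customers
    return "human_review"         # a person confirms before any charge


action = route(Detection(confidence=0.95, estimated_cost=500.0))
```

Here the $500 scratch from the story lands in front of a person instead of on your phone.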

Now imagine something better.

You’re a Gold member. The AI spots a minor scratch — not even 100% sure it’s real.

A ping hits your phone: “We detected a minor issue. Thanks to your Gold status, we’ve taken care of it. No action needed.”

You feel protected. You stay loyal.

And you still don’t know: was the scratch even real?

Epilogue: Living in the Valley

I live between Stanford and NVIDIA.

I’ve met some of the authors of that paper. I’m lucky enough to have a mathematician on speed dial (thx Luca!) when formulas get tricky — or to sip Amaro in a backyard while debating ethics in AI with a Big Tech director.

But it always boils down to one question:

What are the systemic conditions that allow AI to operate in the real world?

Firing a missile vs. issuing a bill.

How can actions so different end up carrying the same perceived level of responsibility?

We can’t afford futures where every module is “technically correct” but the system still generates injustice. Futures built by brilliant engineers so deep in local optimization they forget to ask:

“If this decision is wrong, who pays, and who suffers?”

This is exactly the kind of situation where engineers need a metaphor at hand as a reminder. Like The Good (Synthetic Data Weighted), the Bad (Un-weighted and Un-systemic), and the Ugly (Outcome).

Because in the real world, ethics alone won’t cut it — and our engineers need math, not words.

The paper — “Leveraging Correlation Across Test Platforms for Variance‑Reduced Metric Estimation” — is signed by heavyweights: Marco Pavone, Edward Schmerling, and others across Stanford, NVIDIA, and Georgia Tech.

Their formula embraces the real-world messiness. Built to doubt. To rebalance.

To remind us:

Simulation is NOT reality.

Design ISN’T math.

But sometimes, math is design.

——

P.S. As some of you know, for the past few months I’ve taken on the role of Chief Product Designer at D.gree — a company turning superyachts (yes, the kind Italy is known for) into sea-robots with unprecedented capabilities.

Features like assisted docking aren’t tech demos. They’re critical scenarios, where every decision depends on a system that can truly see and understand.

You need real-time data. A deep knowledge of the vessel.

Live analysis of the pier, floating obstacles, sea conditions — and the exact position of every human involved in the maneuver, including a double-check on their presence and field of view at the controls.

This is not a context where you can Photoshop a scratch and feed it to a model.

The risk is real. And the return on investment must be systemic, generalizable, scalable.

A walk along the shoreline with a 120-meter robot, four decks high.

Superyachts? We should catch up over a coffee or something.